Part 4: Training the neural network

Welcome to Part 4 of our tutorial, where we focus on training the neural network we built in the previous section.

- Introduction

- Getting Started

- Transforming Kaggle Data and Convolutional Neural Networks (CNNs)

- Training our Neural Network (Current)

- Optimising our neural network

- Converting and Freezing our CNN

- Quantising our CNN

- Compiling our CNN

- Running our code on the DPU

- Conclusion Part 1: Improving Convolutional Neural Networks: The weaknesses of the MNIST based datasets and tips for improving poor datasets

- Conclusion Part 2: Sign Language Recognition: Hand Object detection using R-CNN and YOLO

The Sign Language MNIST Github

Training and Inference phases

Before we get started training, we need to understand the overall flow of developing neural networks and how this relates to our hardware. Neural networks have two distinct stages:

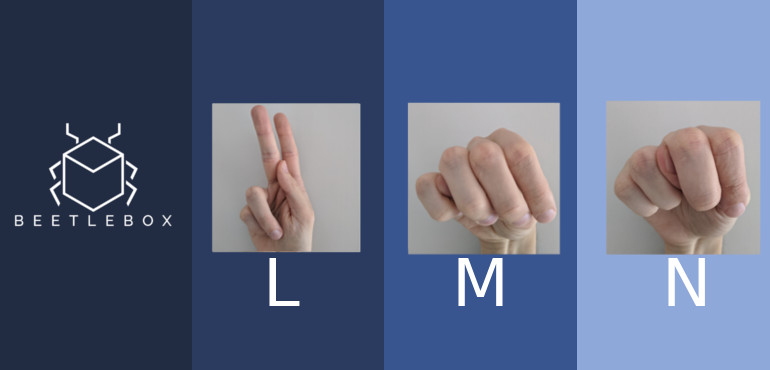

- Training phase: This is where the neural network undergoes supervised learning, in which we feed in data with known labels. The CNN attempts to predict the label and, using the results of that prediction, we strengthen or weaken the neuron connections by adjusting their weights. This is done through a process known as backpropagation: once the network makes a prediction, we work backwards through the layers to find which connections contributed most to the error, so that we can adjust those connections’ weights accordingly. We repeat training on the data several times, and each repetition is known as an epoch. Through this training, the CNN learns how to extract the general features of the image and make predictions based on these features. In our case, the CNN will need to learn how to distinguish fingers and how the position of those fingers relates to different signs.

- Inference phase: Once the training is complete, we freeze the CNN, locking the weights. At this point, the CNN has learned all the features it needs to extract and how to make predictions based on those features, so we no longer need to perform the computationally expensive training and can remove any code or layers associated with it. The inference phase is significantly faster and less memory intensive than the training phase, as we are only performing ‘forward passes’ through the network, which means we only move forward from one layer to the next. We no longer need to hold on to intermediate results in order to look backwards.

In fact, the reduction in memory usage is one of the main reasons why FPGAs are so good at the inference phase. FPGAs have always suffered from memory bandwidth issues, but if we can minimise the number of memory transfers, we can overcome this weakness. We can split the two phases by hardware:

- Training phase: Training uses large amounts of memory, as the results of each layer need to be saved, and involves a large number of calculations that can be performed in batches. This makes GPUs, with their parallel processing and large memory bandwidth, great at performing training. In our case, since our CNN is so small, we can get away with just using a CPU.

- Inference phase: There is no need to save results in between layers, and data may not be available in batches (such as when we are constantly inputting frames from a video). FPGAs are a great fit for this: although they have limited memory bandwidth, they can work effectively on small amounts of data. Through the efficient hardware accelerators that Vitis AI uses, we can minimise memory movement whilst performing a high number of calculations per second, which means we can outperform GPUs in terms of latency and power consumption. We also outperform CPUs in terms of throughput. This is not the only reason to use FPGAs for inference; other factors also increase our performance, as we will explore in later tutorials.
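To make the two phases concrete, here is a minimal sketch in plain Python. It is not the tutorial's CNN: it assumes a single dense layer with softmax, trained on two made-up samples, and the names `forward`, `train_step` and the toy data are purely illustrative. The point is that training runs a forward pass and then uses the error to adjust the weights, whereas inference only runs the forward pass on frozen weights.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(weights, features):
    """Forward pass: one dense layer followed by softmax."""
    logits = [sum(w * x for w, x in zip(row, features)) for row in weights]
    return softmax(logits)

def train_step(weights, features, one_hot_label, learn_rate):
    """One training update: forward pass, measure the error, adjust the weights."""
    probs = forward(weights, features)
    # For softmax with cross-entropy loss, the gradient at each output is (p - y).
    errors = [p - y for p, y in zip(probs, one_hot_label)]
    for row, err in zip(weights, errors):
        for i, x in enumerate(features):
            row[i] -= learn_rate * err * x  # move each weight against its gradient
    # Return the loss measured before this update was applied.
    return -math.log(probs[one_hot_label.index(1)])

# Toy data (hypothetical): two features, two classes, one-hot labels.
samples = [([1.0, 0.0], [1, 0]), ([0.0, 1.0], [0, 1])]
weights = [[0.0, 0.0], [0.0, 0.0]]

# Training phase: each full pass over the data is one epoch.
losses = []
for epoch in range(20):
    epoch_loss = sum(train_step(weights, x, y, 0.5) for x, y in samples)
    losses.append(epoch_loss / len(samples))

# Inference phase: the weights are frozen and only the forward pass runs.
prediction = forward(weights, [1.0, 0.0])
predicted_class = prediction.index(max(prediction))
print(losses[0], losses[-1], predicted_class)
```

In a real multi-layer network, the training step must also keep every layer's intermediate output so that the error can be propagated backwards, which is where the extra memory cost of training comes from.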

Training

Now that we have our CNN in place, we need to train it. main.py handles both the building and the training of our neural network. As before, launch the Docker image and run main.py:

    python3 main.py

This will run our training. We use the following settings to compile our network:

    LEARN_RATE = 0.0001
    DECAY_RATE = 1e-6
    ...

    model.compile(loss='categorical_crossentropy',
                  optimizer=optimizers.Adam(lr=LEARN_RATE, decay=DECAY_RATE),
                  metrics=['accuracy'])

- Loss: The model’s output error, i.e. a measure of the difference between the model’s prediction and the actual label, which we seek to minimise during training. categorical_crossentropy is a popular choice for classification networks whose labels are one-hot encoded.

- Optimizer: The algorithm that tweaks our weights according to our loss. Adam adapts the step size for each weight individually and usually converges faster than older and simpler optimisers, such as plain Stochastic Gradient Descent.

- LEARN_RATE: The rate at which the weights are changed or ‘learned’. Higher learning rates mean less time is needed to train, but can also make results unstable. Most efficient training runs lower the learning rate as training progresses, which we can achieve using the decay rate.

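- DECAY_RATE: As training progresses, we decrease the learn rate by a factor determined by the decay rate. Note that Keras applies this decay after every weight update (every batch) rather than once per epoch. Using decay, we can start with a learning rate high enough to train in fewer epochs, whilst not risking an unstable loss later on.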

- metrics: Finally, at the end of each epoch we want to look at the accuracy to see how well the network is learning.
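Two of these settings can be illustrated with a few lines of plain Python. The sketch below is not taken from main.py. It assumes the standard definition of categorical cross-entropy for a single sample, and the time-based schedule that the `decay` argument of the older Keras optimizers applies, which counts weight updates (batches). The function names are hypothetical, and the sample count and batch size are the ones used later in this section.

```python
import math

LEARN_RATE = 0.0001
DECAY_RATE = 1e-6

def categorical_crossentropy(one_hot_label, predicted_probs):
    """Loss for one sample: -sum(y * log(p)); only the true class contributes."""
    eps = 1e-7  # guard against log(0)
    return -sum(y * math.log(max(p, eps))
                for y, p in zip(one_hot_label, predicted_probs))

def decayed_learn_rate(initial_rate, decay, iterations):
    """Time-based decay (assumed schedule): lr = initial / (1 + decay * iterations)."""
    return initial_rate / (1.0 + decay * iterations)

# A confident correct prediction gives a small loss, a confident wrong one a large loss.
confident_right = categorical_crossentropy([0, 1, 0], [0.05, 0.90, 0.05])
confident_wrong = categorical_crossentropy([0, 1, 0], [0.90, 0.05, 0.05])

# 23455 training samples in batches of 32 means 733 weight updates per epoch.
updates_per_epoch = math.ceil(23455 / 32)
rate_after_3_epochs = decayed_learn_rate(LEARN_RATE, DECAY_RATE, 3 * updates_per_epoch)

print(confident_right, confident_wrong)
print(updates_per_epoch, rate_after_3_epochs)
```

Under these assumptions, a decay of 1e-6 lowers the learning rate by only a fraction of a percent over three epochs, so its effect only becomes noticeable on much longer training runs.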

    BATCHSIZE = 32
    EPOCHS = 3
    ...

    model.fit(train_data,
              train_label,
              batch_size=BATCHSIZE,
              shuffle=True,
              epochs=EPOCHS,
              validation_data=(val_data, val_label))

For each training run, we need to set some parameters:

- train_data, train_label: The training data and labels we created last time

- batch_size: Batch size determines the number of samples we use to find the errors in the connections before we update the weights. Larger batch sizes make more efficient use of GPUs, as we are able to feed in more data in parallel, but they need more memory and can lead to a model that generalises less well.

- shuffle: If we present the data in exactly the same order each epoch, the training is likely to become ‘stuck’ in the same neural network solution. By shuffling the data, we avoid getting stuck in a single solution and can find a better one.

- epochs: The number of times we repeat training

- validation_data: The validation data and labels that we created in the last tutorial
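The interplay between batch_size, shuffle and epochs can be sketched without Keras. The helper below, `iterate_minibatches`, is a hypothetical name and only mimics the bookkeeping: it reshuffles the sample indices at the start of each epoch and hands them out one batch at a time, with one weight update per batch. It is an illustration under those assumptions, not how `model.fit` is implemented internally.

```python
import random

def iterate_minibatches(num_samples, batch_size, shuffle=True, rng=None):
    """Yield lists of sample indices, one list per weight update."""
    rng = rng or random
    indices = list(range(num_samples))
    if shuffle:
        rng.shuffle(indices)  # a fresh order on every call, i.e. every epoch
    for start in range(0, num_samples, batch_size):
        yield indices[start:start + batch_size]

BATCHSIZE = 32
EPOCHS = 3
NUM_TRAIN = 23455  # training-set size used in this tutorial

rng = random.Random(0)  # fixed seed so the sketch is repeatable
updates = 0
first_batches = []
for epoch in range(EPOCHS):
    batches = list(iterate_minibatches(NUM_TRAIN, BATCHSIZE, rng=rng))
    first_batches.append(batches[0])
    updates += len(batches)  # one weight update per batch

print(updates)           # total weight updates over all epochs
print(len(batches[-1]))  # the final batch of an epoch is smaller than the rest
```

Every sample is still seen exactly once per epoch. Shuffling only changes which samples end up grouped together in a batch, and in what order the batches arrive.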

Once we are done training, we need to evaluate the accuracy on the testing data:

    scores = model.evaluate(testing_data, testing_label, batch_size=BATCHSIZE)

The full run produces output similar to the following:

    Train on 23455 samples, validate on 4000 samples
    Epoch 1/3
    23455/23455 [==============================] - 15s 631us/step - loss: 1.3695 - acc: 0.6072 - val_loss: 0.1170 - val_acc: 0.9862
    Epoch 2/3
    23455/23455 [==============================] - 14s 599us/step - loss: 0.2543 - acc: 0.9164 - val_loss: 0.0170 - val_acc: 0.9998
    Epoch 3/3
    23455/23455 [==============================] - 14s 590us/step - loss: 0.0929 - acc: 0.9720 - val_loss: 0.0034 - val_acc: 1.0000
    7172/7172 [==============================] - 1s 99us/step
    Loss: 0.238 Accuracy: 0.922
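The accuracy figure is simply the fraction of samples for which the class with the highest predicted probability matches the label. The sketch below shows that calculation on four made-up predictions. It assumes the usual categorical accuracy definition, and the `accuracy` helper and its data are illustrative rather than part of main.py.

```python
def accuracy(one_hot_labels, predicted_probs):
    """Fraction of samples whose highest-probability class matches the label."""
    correct = 0
    for label, probs in zip(one_hot_labels, predicted_probs):
        predicted_class = probs.index(max(probs))
        true_class = label.index(max(label))
        if predicted_class == true_class:
            correct += 1
    return correct / len(one_hot_labels)

# Hypothetical labels and network outputs for four samples and three classes.
labels = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
preds = [[0.8, 0.1, 0.1],
         [0.2, 0.7, 0.1],
         [0.3, 0.4, 0.3],   # highest score is class 1, but the label is class 2
         [0.1, 0.6, 0.3]]

acc = accuracy(labels, preds)
print(acc)
```

Accuracy only looks at which class wins, whereas the loss also reflects how confident each prediction was, which is why the two numbers are reported side by side.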