Since the early 2010s, the Internet of Things (IoT) has continued to grow. By 2030, its value is estimated to reach up to $12.5 billion globally. Yet the way many teams develop IoT products has changed little since the 1990s. CI and DevOps could change that.

As demand for more sophisticated devices grows while development time frames remain constant, engineers need new ways of ensuring that product quality does not slip. The answer lies in DevOps and Continuous Integration (CI).

DevOps has had a profound impact on software development, and key to its success is the practice of CI. Through CI and DevOps, teams can avoid months of delay caused by integration problems.

CI introduces tight automation between teams that helps them build and test entire systems rapidly. First, it is worth looking at how these techniques have changed software development.

How DevOps conquered software development

In the early days of software development, staff at tech companies were separated into two distinct groups. The Devs, or software developers, were responsible for the design and creation of software products, while the Ops, or IT operations, ensured that the products were stable and ran properly.

Around 2007, developers noticed a problem: the people writing the code and the people deploying and supporting the product were completely separate, creating dysfunctional teams.

The dysfunction can be summed up in a single classic sentence: ‘It works on my machine.’ Software developers were motivated to push out code that worked correctly on their own setup, whilst those in IT needed reliability and consistency, which made them resistant to change. As a result, bugs that were present in early development weren’t discovered until release.

To solve this problem, the idea of DevOps was created: a set of practices, solutions and tools through which both sets of professionals would take ownership of the entire lifecycle of their products, from initial design to continued support and updates.

Companies wanted to move away from large, infrequent and stressful deployments towards smaller, more frequent updates that would change functional code only incrementally and could easily be rolled back. This often fit well with the Agile development practices that were becoming popular.

The idea caught on and is now widely used across the software industry. It is especially popular with developers who utilise Cloud technology, because the Cloud makes it quick to update, scale and monitor large numbers of products and services.

The key ingredient of CI

Any software product is built from a single body of source code that is often maintained with a version control system like Git. This code can then be hosted by a Git repository provider such as GitHub or GitLab.

Before the adoption of DevOps, developers would often have their own versions of the source code that they would then work on until a couple of months before the release date.

The entire development team would then attempt to merge all this code into a single codebase for release. This would inevitably cause ‘merge hell,’ where code clashes and bugs arose as systems attempted to interact with each other. Projects could be delayed by months while the team squashed bugs and integrated the code properly.

One of the key requirements of DevOps is the ability to repeatedly build, test and integrate code changes from a developer into the main codebase of a project. This allows smaller, more frequent updates that avoid merge hell. To meet this requirement, developers adopt the practice of continuous integration (CI).

CI is the practice of automatically integrating code changes into a software project. It encourages developers to push changes to the source code much more frequently, potentially several times a day.

This leads to another problem: how to ensure that developers aren’t pushing broken code that will bring down the central codebase when it runs. The answer is automation.

Simply put, before code is allowed to merge into the main code repository, it must pass a series of tests. These tests could be as simple as ensuring that the code compiles properly, or they could involve more complex checks such as system tests, unit tests, fuzz testing or linting.
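A minimal sketch of such a merge gate in Python might look like the following. It is illustrative only: the function names `run_check` and `merge_allowed` and the stand-in commands are assumptions made for this example, not part of any particular CI product. A real pipeline would call the project's own compiler, linter and test runner instead.

```python
import subprocess
import sys


def run_check(command):
    """Run one check as a subprocess; an exit code of 0 counts as a pass."""
    completed = subprocess.run(command, capture_output=True)
    return completed.returncode == 0


def merge_allowed(checks):
    """Run every check and allow the merge only if all of them pass."""
    results = {name: run_check(command) for name, command in checks.items()}
    for name, passed in results.items():
        print(f"{'PASS' if passed else 'FAIL'}: {name}")
    return all(results.values())


# Hypothetical stand-ins for real build and test commands.
example_checks = {
    "code compiles": [sys.executable, "-c", "compile('x = 1', 'demo', 'exec')"],
    "unit tests": [sys.executable, "-c", "assert 1 + 1 == 2"],
}

if __name__ == "__main__":
    print("merge allowed:", merge_allowed(example_checks))
```

The gate is deliberately all-or-nothing: a single failing check blocks the merge, which is what keeps broken code out of the central codebase.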

Using Continuous Integration, developers can avoid merge hell while also increasing their code quality through automated testing.

What is the IoT?

The term ‘Internet of Things’ was first coined in 1999, but the IoT did not really arrive until 2008. Since then, it has made major strides into our everyday lives. In fact, the number of connected IoT devices is expected to grow to 16.7 billion globally.

In the early 2010s, everyday devices became more connected with the Cloud. This allowed users to control everyday objects, such as thermostats, from the convenience of their mobile phones.

A more technical definition of an IoT device is an electronic device equipped with sensors, embedded systems and software that enable it to connect and communicate via networks, such as the internet. The most popular connectivity technologies are Wi-Fi, Bluetooth and cellular IoT (LTE, 4G, 5G, etc.).

IoT devices range from industrial to consumer products. Consumers will be more familiar with IoT as smart home devices, such as smart speakers, TVs, thermostats, and lighting fixtures that can easily be controlled via a smartphone.

That does not mean that IoT devices are restricted to consumer products. Devices have also seen widespread adoption across industries, from agricultural drones that spray pesticides on targeted areas to predictive maintenance of industrial machinery.

The problem with the way IoT devices are developed

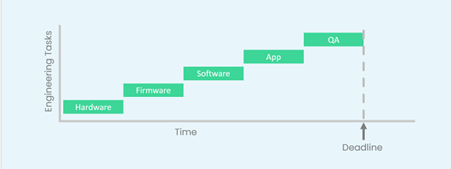

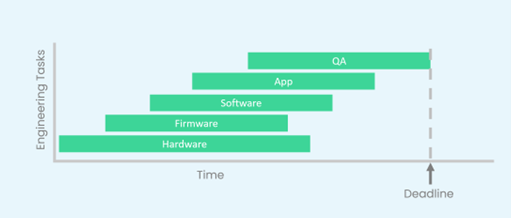

Developing an IoT system involves both hardware design and software development. As mentioned before, all IoT devices include an embedded system to process data and communicate with the network. Traditional embedded system development would follow a waterfall model that looks something like this:

A timeline showing the waterfall development model for embedded systems in IoT devices

Most organisations are split into distinct teams, each of which depends on the others:

- Hardware: The hardware team is responsible for the development of the PCB and any electronic components needed. They are normally the first team to begin because they decide which chips to use based on the requirements. In addition, software/firmware developers think they need the completed boards before they can start development.

- Firmware: The firmware engineers are responsible for the development of any OS or drivers that need to be placed on the device. Since software developers need an OS to develop on, they rely on the firmware engineers.

- Software: The embedded software developers produce the software that processes the data from the sensors and communicates with the network. They will also be responsible for providing the Cloud support needed for the devices to communicate.

- App: The app team will be responsible for providing the interface for the user. This may be in the form of a mobile app or as a GUI on the device itself. They are reliant on all the other teams.

- QA: The quality assurance team will take the final system and test it to ensure it meets the requirements of the user. This is left till the end so all teams have the chance to finish their development.

The problem with this system is that inevitably things go wrong. Bugs are found. The user requirements change midway through the project. The supply of a component is lost. Factory lead times extend. Deadlines approach faster than expected.

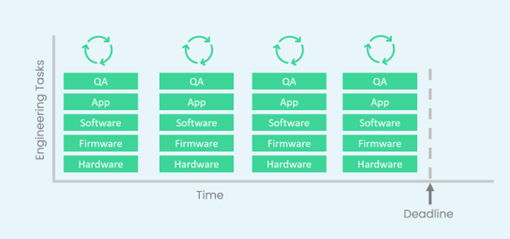

As a result, teams are delayed and cannot wait for each other, so each starts developing before the others have finished, and the schedule begins to look like this:

A more realistic model of the waterfall development model for embedded systems in IoT devices

This way of developing encourages siloed behaviour between teams. At the beginning of the project, they agree on how the layers will communicate with one another, but they only test how those layers form a system in the last few weeks of the project.

This inevitably causes merge hell. System-level bugs occur and requirements are not met. No customer wants to use the product, and deadlines are pushed back by months.

How DevOps solves IoT development problems

Instead of following a waterfall framework, teams that adopt DevOps can use a more agile framework in which the product is split into incremental stages.

For instance, if a team were developing an industrial IoT sensor for the predictive maintenance of a motor, it could break down the requirements until a Minimum Viable Product is found. This could be as simple as providing temperature sensors that the user is able to monitor from anywhere.

In each iteration, the system is fully designed, developed, tested and deployed to early users. With every iteration, more requirements are added until an end product for customers is reached.

A DevOps model of development using Agile methodology for embedded systems in IoT devices.

Crucially, all teams work towards a functional system at the end of each iteration. This prevents merge hell at the end of development because the pain of merging is spread across the whole project.

Continuous Integration and the IoT

One of the major problems to solve is that manually integrating every level into one system is incredibly time-consuming. Once manual testing is added, the process becomes almost impossible to complete on time.

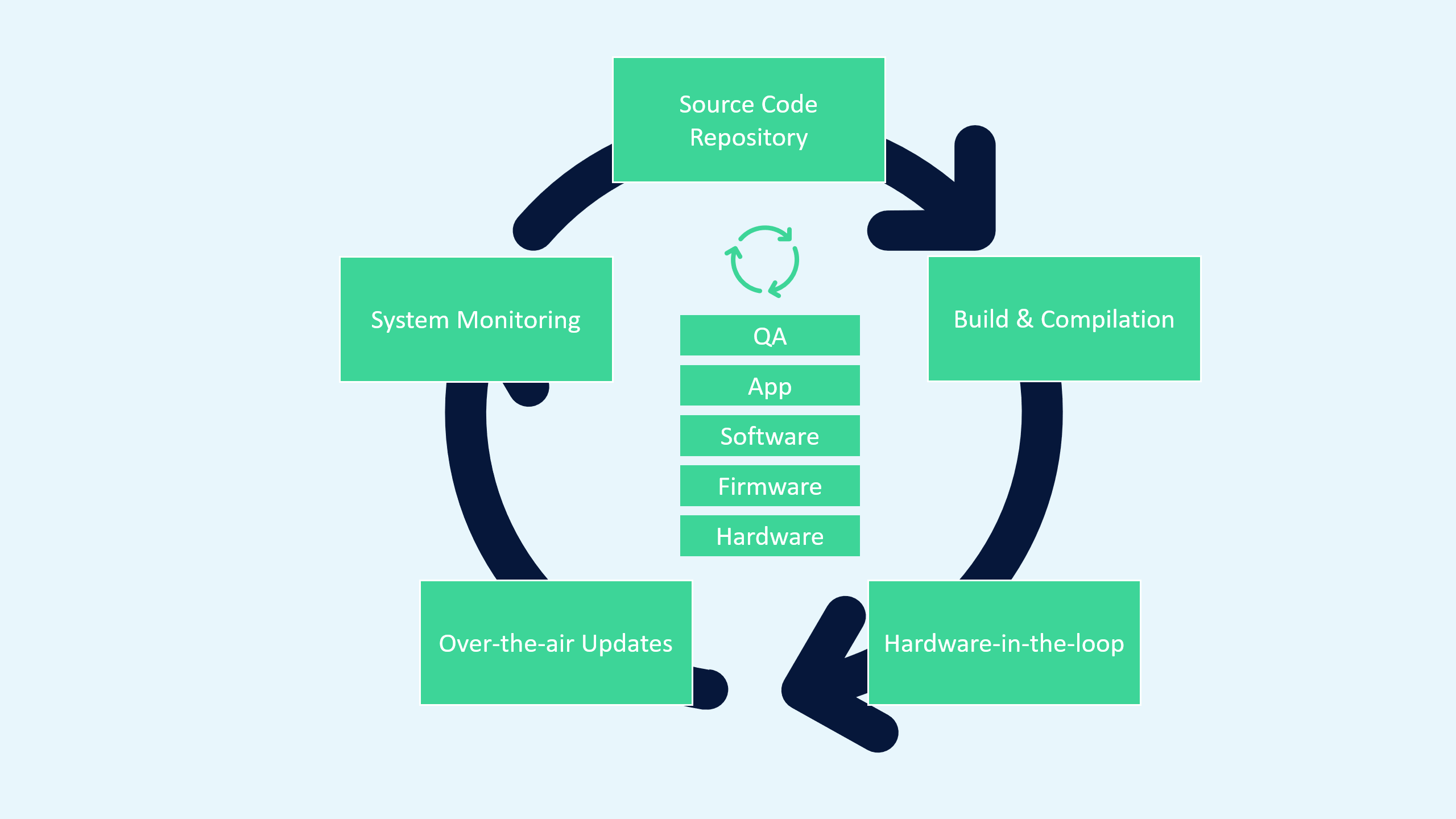

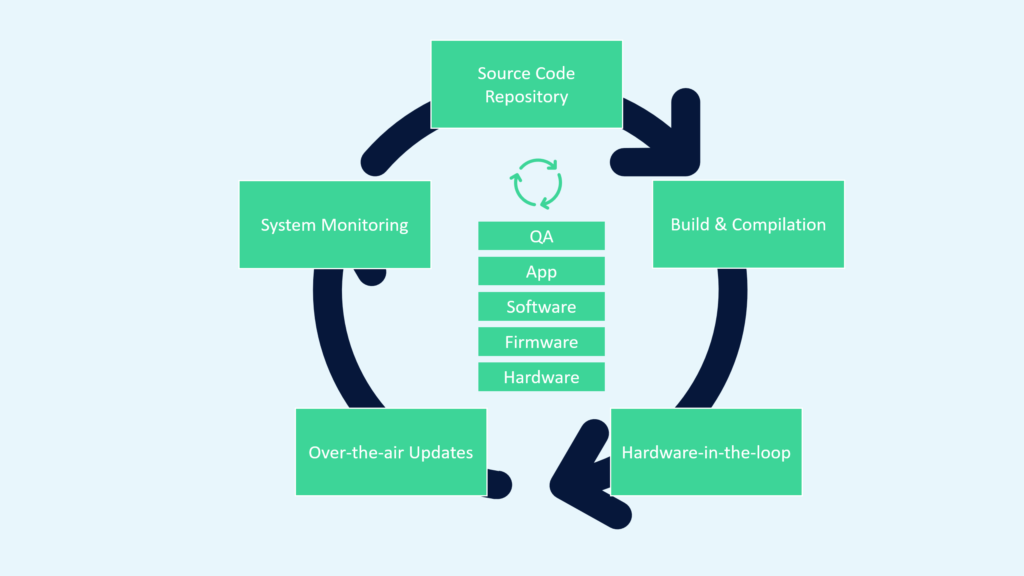

This is why automated testing via continuous integration is crucial. It enables cross-team collaboration as well as automatic system integration and testing. We can see a basic overview in the following diagram:

A continuous integration model for IoT development

In CI, all teams work together in a single source code repository, which contains everything from the firmware to the code used to set up the Cloud. On a regular basis, the code is built and compiled. The system can resolve each team’s dependencies by building the first layer and using the files from that layer to build the layer above.
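The layered build described above can be sketched as a dependency graph that is walked from the bottom layer upwards. The sketch below uses Python's standard `graphlib` module; the layer names, their dependencies and the `.bin` artifact names are hypothetical and chosen only to mirror the teams described earlier.

```python
from graphlib import TopologicalSorter

# Hypothetical layers: each maps to the layers whose build outputs it needs.
LAYER_DEPENDENCIES = {
    "firmware": set(),
    "embedded_software": {"firmware"},
    "cloud": {"embedded_software"},
    "app": {"embedded_software", "cloud"},
}


def build_order(dependencies):
    """Return the layers in an order where each one follows its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


def build_all(dependencies):
    """Simulate a build: each layer receives the artifacts of the layers below it."""
    artifacts = {}
    for layer in build_order(dependencies):
        inputs = sorted(artifacts[dep] for dep in dependencies[layer])
        artifacts[layer] = f"{layer}.bin"  # placeholder for a real build step
        print(f"built {artifacts[layer]} using {inputs or 'source only'}")
    return artifacts


if __name__ == "__main__":
    build_all(LAYER_DEPENDENCIES)
```

Because the order is derived from the declared dependencies rather than hard-coded, adding a new layer only means adding one entry to the graph.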

The built files can then be tested as regular software or via emulators. The files can also be used to update any Cloud infrastructure needed for the IoT devices.

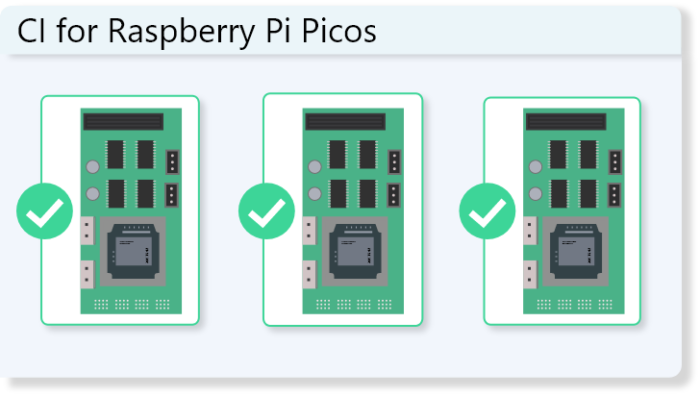

In the case of hardware, the engineers are reliant on batches of prototype boards. This does not mean continuous integration is not possible. Instead, teams can implement hardware-in-the-loop testing, where the prototype boards can be connected to the testing system as soon as possible.
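One way to prepare for hardware-in-the-loop testing is to write tests against an interface that either a real prototype board or a software stand-in can satisfy. The sketch below assumes a hypothetical `Board` interface with a single `read_temperature_c` method, echoing the motor temperature example from earlier; a real rig would add a class that talks to the physical board, which is not shown here.

```python
from typing import Protocol


class Board(Protocol):
    """Anything the test rig can read a temperature from (hypothetical interface)."""

    def read_temperature_c(self) -> float: ...


class SimulatedBoard:
    """Software stand-in used until prototype boards are connected to the rig."""

    def __init__(self, temperature_c: float) -> None:
        self._temperature_c = temperature_c

    def read_temperature_c(self) -> float:
        return self._temperature_c


def temperature_in_range(board: Board, low_c: float, high_c: float) -> bool:
    """The same check runs unchanged against simulated or real hardware."""
    reading = board.read_temperature_c()
    return low_c <= reading <= high_c


if __name__ == "__main__":
    bench_board = SimulatedBoard(temperature_c=21.5)
    print("reading plausible:", temperature_in_range(bench_board, 0.0, 50.0))
```

When a batch of prototype boards arrives, only the object passed to the test changes; the test itself stays the same, so hardware joins the pipeline without rewriting the checks.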

If the boards are already deployed to customers, then software can be updated via over-the-air updates. Finally, the tests can also be used to run system monitoring to extract data from current devices to inform the next iteration.
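As a rough illustration of the decision behind an over-the-air update, the sketch below selects which deployed devices are running firmware older than the latest build. The device names, the dotted version format and the function names are assumptions made for this example; a real update service would also handle delivery, verification and rollback.

```python
def parse_version(version):
    """Turn a dotted version such as '1.10.0' into a tuple of integers."""
    return tuple(int(part) for part in version.split("."))


def devices_needing_update(fleet, latest):
    """Return the devices whose firmware is older than the latest version."""
    target = parse_version(latest)
    return sorted(
        device for device, version in fleet.items()
        if parse_version(version) < target
    )


if __name__ == "__main__":
    # Hypothetical fleet reported by system monitoring.
    fleet = {
        "motor-sensor-01": "1.2.0",
        "motor-sensor-02": "1.10.0",
        "motor-sensor-03": "1.9.3",
    }
    print(devices_needing_update(fleet, latest="1.10.0"))
```

Versions are compared as tuples of integers rather than as strings, so that 1.10.0 is correctly treated as newer than 1.9.3.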

Conclusion

In this article, we have provided the fundamentals needed to understand how CI and DevOps can impact IoT development. CI provides the automation needed to rapidly test code coming from every level of the technology stack. This is needed to ensure that proper DevOps practices are followed and that the device is developed in regular increments rather than waiting until the end of the project.

DevOps helps solve the problem with older development models, such as waterfall, in which teams are siloed off from one another and only integrate their work at the very end. This causes system-level bugs, which can result in months of delay while they are resolved.

DevOps as a discipline has developed rapidly within the software development space and, through the techniques outlined here, it can have a large impact within the IoT. This is especially needed as the IoT continues to become more ubiquitous at both the industrial and consumer levels.