Often when we work with clients, we encounter a certain amount of resistance from embedded software engineers. This is to be expected: adopting CI/CD is not just a matter of using new technologies, but also of introducing new practices within the firm, and that can cause tension in any team. In this article, Ranjith Tharayil highlights the objections he faced when trying to introduce Agile practices. His quotes perfectly capture the five greatest challenges of adopting CI and DevOps for the IoT. We want to challenge these notions and show that any embedded development team can adopt CI and DevOps.

“We cannot have potentially releasable functionality in less than four weeks”.

DevOps encourages frequent code contributions and regular releases to customers. The entire purpose of Continuous Integration and automated testing is to let developers contribute to the codebase regularly without introducing new errors.

But what happens in firmware development, where a single feature can take a few weeks to develop? Hardware is even worse: it could take eight weeks just to manufacture a new board. How can we release anything at four-week intervals?

Simply put, it is not possible to release new hardware to customers every four weeks. However, that does not mean you can’t aim for significant portions of the hardware design process to be performed within a four-week period. Instead of following a linear process of design, testing, sending to QA, and then releasing, DevOps can encourage a more cyclical approach. In the very first iteration, it is possible to create a design and automate the rules-checking and electrical simulations.

Additionally, during the very first iteration, embedded software developers may not be able to run their code on prototype hardware. However, they can use off-the-shelf components that behave similarly to the planned hardware. For instance, development boards that use the same chipsets as the final product can act as a basic substitute.

Remember that client requirements can change fast. A client may believe they know their requirements, but their opinions may change once a device is put into practice. The sooner something is released to early customers or a QA team, the more valuable feedback can be collected for the next iteration of devices.

“We must develop first hardware then firmware and then applications”

This is another common complaint. After all, how could a software team build an application without first having an OS? And to have an OS, there needs to be at least prototype hardware. However, this point misunderstands how IoT device development should work. Ultimately, users of any IoT device interact with its software. The needs of the software should drive the hardware, not the other way around.

As an example, consider software benchmarks such as MLPerf, an AI benchmark suite that runs standard trained models on standard inputs so that systems can be compared on how well they produce results. Hardware developers use these benchmarks to guide the design of their chips. There is even a variant, MLPerf Tiny, designed specifically for the small workloads found on IoT devices.

Adopting a hardware-first attitude can overlook the needs of the user. The embedded software team will receive the most feedback from early clients, so the needs of the software should determine the choice of hardware.

“CI and automated testing doesn’t work in our context”

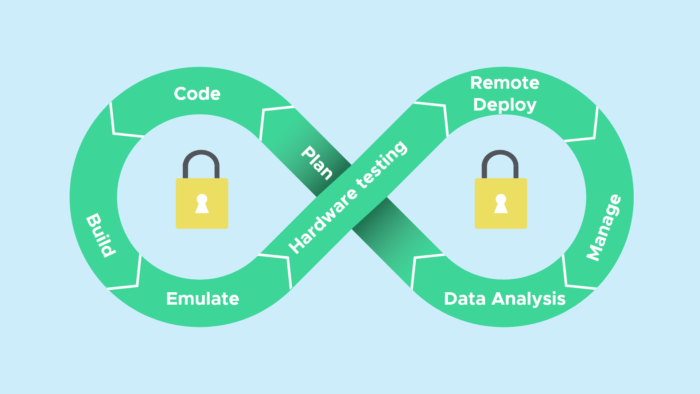

One of the most common beliefs we need to change is that an embedded engineer’s development process is so unique that it cannot be automated without months of investment. In fact, our own continuous integration software was designed to be as hardware-agnostic as possible, yet some people still don’t believe that testing on their devices can be automated.

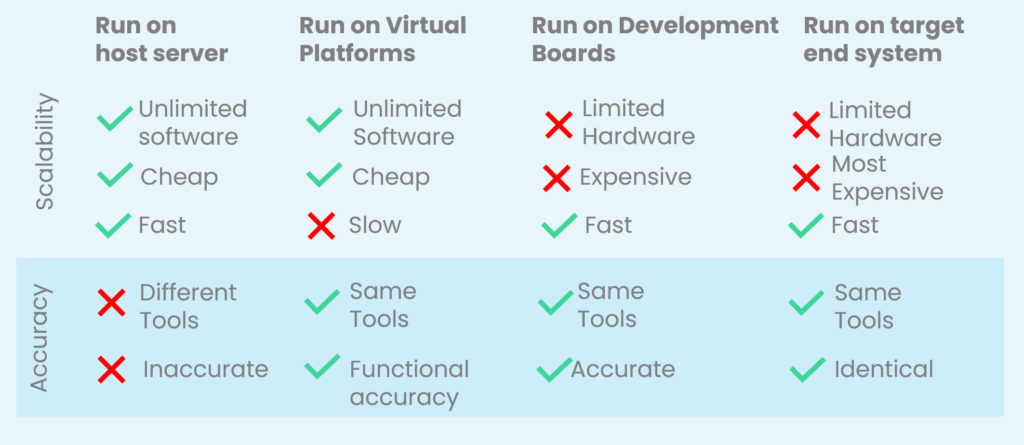

As shown above, testing can happen at various levels, from running code on a host computer to running it on the target embedded system. Each level strikes a different balance between scalability and accuracy, and the exact combination of stages a project needs will vary.

- Run on a host server: The embedded software is built and tested on a host server, using tools that target the server rather than the device. This scales well because we are unlikely to be constrained by the server’s resources, and we don’t need to invest in expensive hardware. The problem is that, because these tools differ from those used for the embedded system, the results may not match what the end system will produce.

- Run on a virtual platform: Instead of running the code directly on a host server, we emulate the target end system on the server. This keeps the scalability while using the same tools as the end target. If the emulation is accurate, we should obtain functionally precise results. The drawback is that virtual platforms are often slow, so tests take a long time to run.

- Run on development boards: Since prototype hardware tends to be ordered in batches and takes time to develop, embedded engineers will not always have the end device available. Development boards give them access to the chipsets that will be used in the IoT device while the hardware is still in development. The problem is that buying development boards in bulk can be expensive, which limits the number of tests that can be run on them.

- Run on the target end system: As hardware development progresses, batches of test devices are produced that can be used as part of the testing process. These are ideal for embedded software developers, but they are often scarce and expensive to produce.

For CI to be unworkable for a project, every one of these stages would have to be impossible. In any project, there is a suitable combination of automated testing that can help reduce the development workload.
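As a rough illustration of the cheapest level, running on a host, the sketch below keeps hardware access behind a small interface so that the same control logic can be exercised with a fake sensor on a CI server and with a real driver on a development board or the target. It is written in Python for readability and is only a sketch: `TemperatureSensor`, `fan_duty_cycle`, `FakeSensor` and the 40 °C/70 °C thresholds are all hypothetical. In C firmware, the same idea is commonly expressed through a hardware abstraction layer whose implementation is swapped at link time or through function pointers.

```python
from typing import Protocol


class TemperatureSensor(Protocol):
    """Hypothetical hardware interface that the control logic depends on."""

    def read_celsius(self) -> float:
        ...


def fan_duty_cycle(sensor: TemperatureSensor, start_c: float = 40.0, full_c: float = 70.0) -> int:
    """Return a fan duty cycle in percent: 0 at or below start_c, 100 at or
    above full_c, and a linear ramp in between (hypothetical logic under test)."""
    temp = sensor.read_celsius()
    if temp <= start_c:
        return 0
    if temp >= full_c:
        return 100
    return round(100 * (temp - start_c) / (full_c - start_c))


class FakeSensor:
    """Host-side stand-in that returns a scripted reading instead of touching hardware."""

    def __init__(self, celsius: float) -> None:
        self.celsius = celsius

    def read_celsius(self) -> float:
        return self.celsius


def test_fan_ramp() -> None:
    # Runs on any CI server: no board and no cross-compiler needed.
    assert fan_duty_cycle(FakeSensor(25.0)) == 0
    assert fan_duty_cycle(FakeSensor(55.0)) == 50
    assert fan_duty_cycle(FakeSensor(90.0)) == 100
```

A test like `test_fan_ramp` only checks the logic. Timing, interrupts and peripheral quirks still need the virtual platform, development board or target levels.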

“We can’t reduce our lead times through CI”

In recent years, chip shortages have plagued the IoT industry. While the shortage seems to be easing, lead times still pose a significant challenge in development.

We cannot control our lead times, which is exactly why DevOps becomes even more crucial: we must maximize the efficiency of the development time we do control.

For example, imagine that an embedded software team currently shares a few development boards among its members. A batch of prototype boards has been ordered, but it has been delayed by eight weeks.

During that time frame, it is crucial that the embedded software team maximizes the usage of their development boards. With effective CI, they can run tests during traditional downtimes, such as at night and on weekends.
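A minimal sketch of that idea, assuming a hypothetical pool of shared boards named `board-1` and `board-2` and a working day of 08:00 to 18:00: queued test jobs are only dispatched outside working hours or at weekends, and are spread round-robin across the boards so none sits idle. A real CI system would hand this off to its own scheduler and job runners; the function names here are illustrative only.

```python
from __future__ import annotations

from datetime import datetime


def is_off_hours(now: datetime, day_start: int = 8, day_end: int = 18) -> bool:
    """True at weekends, or on weekdays outside the hours [day_start, day_end)."""
    if now.weekday() >= 5:  # Monday is 0, so 5 and 6 are Saturday and Sunday
        return True
    return not (day_start <= now.hour < day_end)


def assign_jobs(jobs: list[str], boards: list[str], now: datetime) -> dict[str, list[str]]:
    """Spread queued test jobs round-robin across shared boards, but only off-hours,
    leaving the boards free for interactive use during the working day."""
    plan: dict[str, list[str]] = {board: [] for board in boards}
    if not boards or not is_off_hours(now):
        return plan  # nothing scheduled: jobs stay queued for the next window
    for i, job in enumerate(jobs):
        plan[boards[i % len(boards)]].append(job)
    return plan
```

Called at 22:00 on a Wednesday with jobs `a`, `b` and `c`, `assign_jobs` places `a` and `c` on `board-1` and `b` on `board-2`; called at 10:00 the same day, it leaves both boards free for the engineers.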

While DevOps may not be able to change lead times, it can ensure that teams operate at maximum efficiency with the resources available to them.

“User stories are not for IoT devices”

This statement holds true in certain cases. Most Agile methods recommend writing user stories: informal descriptions of a feature from the point of view of an end user. For instance, when designing an IoT vacuum cleaner, a user might want to check the status of the filters remotely from their mobile phone, so we would write a user story capturing that need.

However, for certain features this approach may not make sense. How do we write a user story about a safety requirement that must be met? The solution is that DevOps does not need to adhere strictly to Agile.

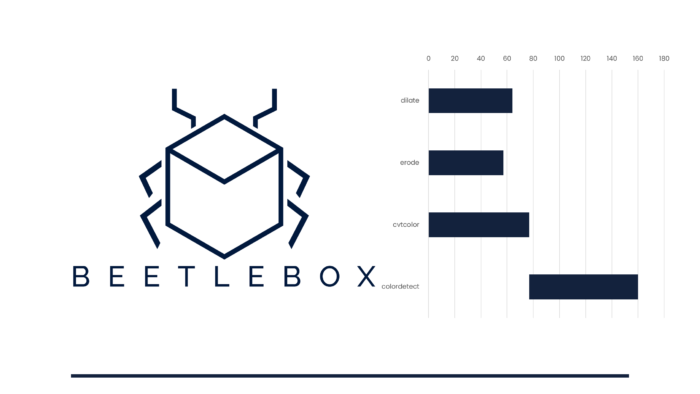

In fact, here at Beetlebox, we are strong proponents of the [Agile V-model](https://aiotplaybook.org/index.php?title=Agile_V-Model), which combines strict requirements with an emphasis on iterative development.
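One way strict requirements and CI can coexist is a traceability check: every requirement, including safety requirements that do not fit the user-story format, is linked to the tests that verify it, and the pipeline flags any requirement that is unlinked or failing. The sketch below assumes hypothetical requirement IDs (`US-1`, `SAF-1`, `SAF-2`) and test names for the vacuum cleaner example; it is not a feature of any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Requirement:
    """A requirement and the IDs of the tests that verify it (hypothetical schema)."""

    req_id: str
    text: str
    tests: list[str] = field(default_factory=list)


def unverified(requirements: list[Requirement], results: dict[str, bool]) -> list[str]:
    """Return the IDs of requirements with no linked tests, or with any linked
    test that failed or did not run (i.e. is missing from results)."""
    flagged = []
    for req in requirements:
        if not req.tests or not all(results.get(t, False) for t in req.tests):
            flagged.append(req.req_id)
    return flagged


# Hypothetical requirements for the IoT vacuum cleaner example.
REQUIREMENTS = [
    Requirement("US-1", "User can check filter status from their phone", ["test_filter_status_api"]),
    Requirement("SAF-1", "Motor stops when the dust bin is removed", ["test_motor_stops_on_bin_removal"]),
    Requirement("SAF-2", "Charging halts above a safe battery temperature"),
]
```

If `test_motor_stops_on_bin_removal` fails, `unverified` returns both `SAF-1` and `SAF-2` (the latter has no tests at all). A CI job could fail the build on any non-empty result, so an unverified safety requirement is as visible as a failing unit test.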

While user stories may not be the optimal choice for certain features, it doesn’t imply that teams cannot benefit from DevOps. Instead, the key is to discover the development process that suits your team.

Conclusion

Change can be intimidating, especially when the concepts of DevOps or CI are new to a team, and this will naturally create resistance and concerns. When a team raises these concerns, remember the following:

- “We cannot have potentially releasable functionality in less than four weeks”: Just because hardware cannot be released in four weeks doesn’t mean we cannot use off-the-shelf components to make functionality available to early customers or QA teams.

- “We must develop first hardware then firmware and then applications”: The application is what end users will interact with, and is thus the most critical part of the system. Delaying application development until the end is a recipe for disaster.

- “CI and automated testing doesn’t work in our context”: Regardless of how unique a team’s development flow is, there is always an automated process that can help reduce the workload.

- “We can’t reduce our lead times through CI”: Although we cannot reduce lead times, CI can enhance the efficiency of the development time we do have.

- “User stories are not for IoT devices”: User stories are just one component of an agile development process. They can be replaced with something more suitable where necessary.

Useful links

What is DevOps in an Embedded Device Company?

Agile Embedded Software Development: The Best of Both Worlds

Build faster, healthier code with Embedded Continuous Integration