Technology

Problem

When displaying video back to the user, many robots and drones suffer from shaky camera footage. Often, gimbals take up too much room or power. Sundance looked for a solution to stabilise their footage on their robotics platform.

Solution

We proposed building a real-time digital image stabilisation system on their robotics platform. On top of that, we used BeetleboxCI to run tests that ensure the smoothness of the video.

Outcome

By using FPGA hardware-accelerated modules, we were able to achieve real-time video stabilisation at 1080p 30 FPS, with minimal latency. Our solution also enables real-time monitoring of the video stabilisation to ensure a consistent, smooth video is maintained.

“Beetlebox met two critical criteria for the edge. Firstly, it enabled heavy amounts of computation to run at lower power than conventional CPUs. It also ran the video stabilisation at a consistent, low latency, something that GPUs have traditionally struggled with.”

Pedro Machado

R&D Manager, Sundance Microprocessor Technology

Video Stabilisation Challenge

Sundance Microprocessor Technology specialise in the production of Commercial-Off-The-Shelf boards for robotics and visual applications, many of which are powered by FPGAs.

Often, the cameras they deploy on robots and drones suffer from shaky footage caused by uneven terrain, high winds or motors. Gimbals are prohibitively large or consume too much power, and the cameras that Sundance uses have no built-in stabilisation features.

Partnering with us, Sundance wanted to develop a video stabilisation system that would run entirely on the boards. The technical challenge was to analyse high definition footage at 30 FPS without the user noticing any latency.

Meeting the challenge

Our solution provided both the high-performance video analytics and the data analytics. We developed real-time electronic video stabilisation and achieved 1080p at 30 FPS, whilst minimising delay on the video.

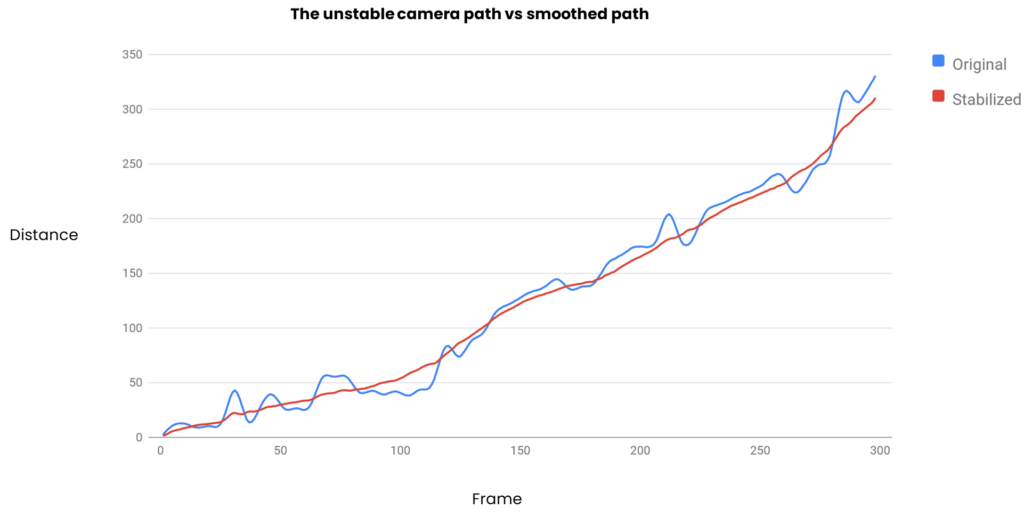

To perform video stabilisation, we first estimated the movement of the camera by tracking static features in the video. For instance, we could detect movement of the mountain in the background from one frame to the next. Since mountains remain stationary, we knew any apparent movement must have been caused by the camera shaking. We could then map these shakes onto a graph, plotting the camera's movement over time.
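As a rough illustration of this idea, the sketch below estimates the apparent image shift between two frames from matched static feature points, then accumulates those shifts into a path that could be plotted. It is a minimal pure-Python sketch under stated assumptions, not the FPGA implementation: the function names `estimate_shift` and `accumulate_trajectory` are hypothetical, the feature matching itself is assumed to have been done elsewhere, and the median is our own choice for ignoring the odd feature that sits on a moving object.

```python
from statistics import median


def estimate_shift(prev_pts, curr_pts):
    """Return the median (dx, dy) displacement of matched feature points.

    prev_pts and curr_pts are equal-length lists of (x, y) pixel positions
    for the same features in two consecutive frames (hypothetical input).
    The median keeps a few features on moving objects from skewing the result.
    """
    if not prev_pts or len(prev_pts) != len(curr_pts):
        raise ValueError("need equal-length, non-empty point lists")
    dx = median(c[0] - p[0] for p, c in zip(prev_pts, curr_pts))
    dy = median(c[1] - p[1] for p, c in zip(prev_pts, curr_pts))
    return dx, dy


def accumulate_trajectory(shifts):
    """Running sum of per-frame shifts: the apparent path that can be plotted."""
    x = y = 0.0
    path = []
    for dx, dy in shifts:
        x += dx
        y += dy
        path.append((x, y))
    return path


# Two background features shift by (2, -1); the third sits on a moving object.
prev_pts = [(10, 10), (50, 20), (80, 60)]
curr_pts = [(12, 9), (52, 19), (95, 70)]
shift = estimate_shift(prev_pts, curr_pts)
path = accumulate_trajectory([shift, (1, 1)])
```

Because static scenery appears to move only when the camera does, the accumulated path is a proxy for the camera shake itself.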

The final step was to remove the shakes and create a smooth version of the movements in real time. To ensure that the video remained smooth, we also provided the camera movement data so that the stabilisation could be monitored.
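One simple way to picture the smoothing step is a causal moving average over the camera path, with each frame then shifted by the difference between the smoothed and the raw position. The sketch below assumes that approach purely for illustration; the filter actually used is not described here, and `smooth_path`, `corrections` and the `window` parameter are hypothetical names.

```python
def smooth_path(path, window=5):
    """Causal moving average: each point averages itself and earlier points only,
    so no future frames are needed and the filter can run in real time."""
    smoothed = []
    for i in range(len(path)):
        recent = path[max(0, i - window + 1): i + 1]
        n = len(recent)
        smoothed.append((sum(p[0] for p in recent) / n,
                         sum(p[1] for p in recent) / n))
    return smoothed


def corrections(path, smoothed):
    """Per-frame (dx, dy) offset that moves the raw path onto the smoothed one."""
    return [(s[0] - r[0], s[1] - r[1]) for r, s in zip(path, smoothed)]


# A camera path that jitters left and right by two pixels.
raw = [(0, 0), (2, 0), (0, 0), (2, 0)]
smooth = smooth_path(raw, window=2)
offsets = corrections(raw, smooth)
```

A longer window gives a steadier picture but reacts more slowly to intentional camera movement, so the window length is a trade-off between smoothness and responsiveness.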

Saving time with BeetleboxCI

We were also able to continuously deliver updates directly to our FPGA boards. This meant we could quickly and efficiently deliver new features and updates, ensuring that Sundance's products remain up to date.

Finally, running simulations on pre-set video files enabled us to automatically generate the camera movements, so any time we made a change to our smoothing algorithm, we were able to quickly generate reports telling us whether an improvement had occurred.
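A report of this kind could, for example, compare a smoothness score for the camera path before and after an algorithm change. The sketch below is only an illustration under our own assumptions: it is not BeetleboxCI's API, and the `jitter` metric (mean magnitude of the frame-to-frame change in motion) and the `report` function are hypothetical.

```python
from math import hypot


def jitter(path):
    """Mean magnitude of the second difference of the path; lower is smoother.

    A path moving at constant speed scores 0, while one that keeps
    reversing direction scores high.
    """
    if len(path) < 3:
        return 0.0
    total = 0.0
    for a, b, c in zip(path, path[1:], path[2:]):
        total += hypot(c[0] - 2 * b[0] + a[0], c[1] - 2 * b[1] + a[1])
    return total / (len(path) - 2)


def report(baseline_path, candidate_path):
    """Summarise whether the candidate algorithm produced a smoother path."""
    base = jitter(baseline_path)
    cand = jitter(candidate_path)
    return {"baseline_jitter": base, "candidate_jitter": cand, "improved": cand < base}


# Paths generated from the same pre-set test video by two algorithm versions.
baseline = [(0, 0), (2, 0), (0, 0), (2, 0)]
candidate = [(0, 0), (1, 0), (2, 0), (3, 0)]
result = report(baseline, candidate)
```

Running the same check on every change against the same video files turns "does it look smoother?" into a number that can pass or fail automatically.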

Looking Ahead

Through BeetleboxCI, we were able to build, test and monitor real-time video stabilisation running in high definition at 30 FPS. The next step for our partnership with Sundance will be porting this to their latest generation boards. Using BeetleboxCI, we will provide the same rapid iteration and reduced risk to the deployment of these robots.