Part 9: Running our code on the DPU

We now have our compiled model ready to run on our board. In this tutorial we will run it on the DPU and explore the code that interacts with the DPU API.

- Introduction

- Getting Started

- Transforming Kaggle Data and Convolutional Neural Networks (CNNs)

- Training our Neural Network

- Optimising our CNN

- Converting and Freezing our CNN

- Quantising our graph

- Compiling our CNN

- Running our code on the DPU (Current)

- Conclusion Part 1: Improving Convolutional Neural Networks: The weaknesses of the MNIST based datasets and tips for improving poor datasets

- Conclusion Part 2: Sign Language Recognition: Hand Object detection using R-CNN and YOLO

The Sign Language MNIST GitHub

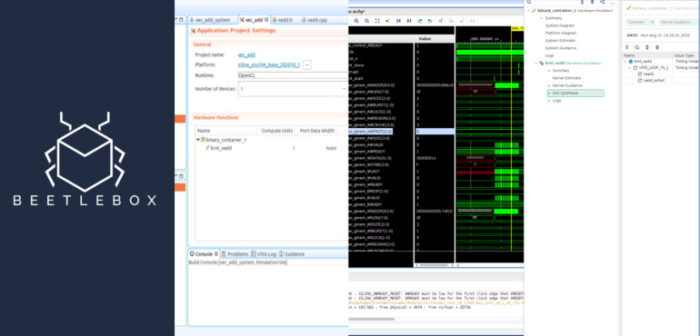

Recap of running our code on the FPGA board

This will be a quick recap on how to run our code on the FPGA board.

- Turn on the FPGA and access it through the UART port

- Through the UART port we can configure the network settings of the FPGA so that we can access the board via SSH:

ifconfig eth0 192.168.1.10 netmask 255.255.255.0

- We can then connect to the board as shown in the user guide

- Now that we have the DPU on the FPGA fabric, we need the relevant libraries to run it, which we can get through here. Transfer the package onto the FPGA via SCP:

scp <download-directory>/vitis-ai_v1.1_dnndk.tar.gz root@192.168.1.10:~/

- Then using the terminal through the board:

    tar -xzvf vitis-ai_v1.1_dnndk.tar.gz
    cd vitis-ai_v1.1_dnndk
    ./install.sh

- We then need to transfer over the deploy folder

scp -r <Cloned-directory>/sign_language_mnist/deploy root@192.168.1.10:~/

- Finally, we can run the file:

    cd sign_language_mnist/deploy
    source ./compile_shared.sh
    python3 sign_language_app.py -t 1 -b 1 -j /home/root/deploy/dpuv2_rundir/

The Sign Language App and the DPU API

The main file that we run on the board is sign_language_app.py, so let us take a look at its parameters:

- -j, --json Path of folder containing meta.json file. No default, must be supplied by user.

- -i, --image_dir Path of images folder. Default is ./images

- -c, --custom_dir Path of custom images folder. Default is ./custom_images

- -t, --threads Number of threads. Default is 1

- -b, --batchsize Input batchsize. Default is 1

The image_dir option points to a directory containing a sample of our test images, which we use to make sure that our CNN is still working on the board. The app runs through each file in that directory. Similarly, the app runs through every file held in custom_dir, which can be filled with any custom images that we want to try our CNN on. The meta.json file contains the runtime information about the DPU. The threads option determines the number of threads to be run on the DPU, whilst batchsize determines the number of images fed in at any one time.
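To make the parameter list concrete, here is a minimal sketch of how these options could be declared with Python's argparse module. It is an assumption about how the app might parse its arguments, not a copy of sign_language_app.py; the build_parser helper and the description string are hypothetical.

```python
import argparse

def build_parser():
    """Build a parser mirroring the options listed above (illustrative sketch only)."""
    parser = argparse.ArgumentParser(description="Run the sign language CNN on the DPU")
    parser.add_argument("-j", "--json", required=True,
                        help="Path of folder containing meta.json file")
    parser.add_argument("-i", "--image_dir", default="./images",
                        help="Path of images folder")
    parser.add_argument("-c", "--custom_dir", default="./custom_images",
                        help="Path of custom images folder")
    parser.add_argument("-t", "--threads", type=int, default=1,
                        help="Number of threads")
    parser.add_argument("-b", "--batchsize", type=int, default=1,
                        help="Input batchsize")
    return parser

# Parse the same arguments used in the recap, passed explicitly instead of sys.argv
args = build_parser().parse_args(["-t", "1", "-b", "1", "-j", "/home/root/deploy/dpuv2_rundir/"])
print(args.json, args.image_dir, args.custom_dir, args.threads, args.batchsize)
```

Because -j is marked as required, leaving it out makes argparse exit with a usage message, which matches the "must be supplied by user" note.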

The sign_language_app.py will then call the runApp function, which creates a runner from runner.py:

dpu = runner.Runner(meta_json)

The Python Runner class handles the initialisation and the communication with the DPU API through six functions:

- def __init__(self, path)

- def get_input_tensors(self)

- def get_output_tensors(self)

- def get_tensor_format(self)

- def execute_async(self, inputs, outputs)

- def wait(self, job_id)

The role of each function is self-explanatory, and most applications will follow the same basic formula:

- Create the runner: dpu = runner.Runner(meta_json)

- Execute the runner: job_id = dpu.execute_async(inputData,outputData)

- Wait for the runner to finish: dpu.wait(job_id)

- Post-process result
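The four steps can be tried off the board with a stand-in runner. The sketch below uses a hypothetical FakeRunner class that only imitates the execute_async and wait calls named above; its scoring rule is invented purely so that there is something to post-process, and it does not reflect how the real runner or the DPU behaves.

```python
import itertools

class FakeRunner:
    """Hypothetical stand-in for runner.Runner so the flow can run without a board."""

    def __init__(self, path):
        self.path = path                    # folder that would hold meta.json
        self._job_ids = itertools.count()   # hands out increasing job ids
        self._finished = set()

    def execute_async(self, inputs, outputs):
        # The real runner would start a job on the DPU. Here we write two
        # made-up scores per image into the caller's output buffer.
        for i, image in enumerate(inputs[0]):
            outputs[0][i] = [sum(image), 1.0]
        job_id = next(self._job_ids)
        self._finished.add(job_id)
        return job_id

    def wait(self, job_id):
        # The real call blocks until the job finishes; the fake job is already done.
        if job_id not in self._finished:
            raise ValueError("unknown job id")

# 1. Create the runner
dpu = FakeRunner("/home/root/deploy/dpuv2_rundir/")

# 2. Execute the runner on one batch of two tiny "images" with a pre-allocated output
inputData = [[[0.1, 0.2], [2.0, 3.0]]]
outputData = [[None, None]]
job_id = dpu.execute_async(inputData, outputData)

# 3. Wait for the runner to finish
dpu.wait(job_id)

# 4. Post-process: index of the highest score for each image
predictions = [max(range(len(s)), key=s.__getitem__) for s in outputData[0]]
print(predictions)
```

The point of the sketch is the calling pattern: the caller allocates both buffers, execute_async returns a job id straight away, and the output buffer is only read after wait returns.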

Once we have created the runner, we pre-process the images in the same way we have been doing throughout the tutorial, by reshaping them to fit the input node shape and then dividing by 255:

    for i in range(len(listImage)):
        image = cv2.imread(os.path.join(image_dir, listImage[i]), cv2.IMREAD_GRAYSCALE)
        image = image.reshape(28, 28, 1)
        image = image/255.0
        img.append(image)

Afterwards we need to set up the threads that run the DPU. In our particular case we have only set up one thread. The optimal number of threads depends on the particular board setup and the number of DPU cores available. A maximum of four cores is available per DPU, but more cores consume more resources on the board. To find the best setting, try varying the number of threads between 1 and 16.

    threadImages = int(len(img)/threadnum) + 1

    # set up the threads
    for i in range(threadnum):
        startIdx = i*threadImages
        if ((len(listImage) - (i*threadImages)) > threadImages):
            endIdx = (i+1)*threadImages
        else:
            endIdx = len(listImage)
        t1 = threading.Thread(target=runDPU, args=(dpu, img[startIdx:endIdx], batchSize, results, i, threadImages))
        threadAll.append(t1)

Here img is our pre-processed list of images. The loop divides the images as evenly as it can between the threads, with the last thread taking whatever is left over, and then creates the threads. Each thread individually runs the runDPU function, which is where our runner is executed.
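To see what the index arithmetic in the thread setup produces, the sketch below pulls it into a small function and runs it with placeholder data. The partition and worker helpers are hypothetical names introduced for illustration, and worker is only a stand-in for runDPU that records the length of each placeholder string.

```python
import threading

def partition(num_images, threadnum):
    """Return one (startIdx, endIdx) pair per thread, using the same arithmetic as the setup loop."""
    threadImages = int(num_images / threadnum) + 1
    ranges = []
    for i in range(threadnum):
        startIdx = i * threadImages
        if (num_images - startIdx) > threadImages:
            endIdx = (i + 1) * threadImages
        else:
            endIdx = num_images
        ranges.append((startIdx, endIdx))
    return ranges

def worker(chunk, results, offset):
    # Hypothetical stand-in for runDPU: store each item's length as its "result"
    for k, item in enumerate(chunk):
        results[offset + k] = len(item)

img = ["img%d" % n for n in range(10)]   # placeholder "images"
results = [None] * len(img)
ranges = partition(len(img), 3)

# Create, start and join one thread per range
threadAll = [threading.Thread(target=worker, args=(img[s:e], results, s)) for s, e in ranges]
for t in threadAll:
    t.start()
for t in threadAll:
    t.join()

print(ranges)
print(results)
```

With ten images and three threads, the first two threads receive four images each and the last receives the remaining two. Each thread writes to its own slice of the shared results list, so the threads never write to the same index.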

Now that each thread has been allocated a set number of images, it needs to execute them on the DPU. Each thread feeds its allocated images to the DPU in batches (in our case the batch size is 1):

    inputTensors = dpu.get_input_tensors()
    outputTensors = dpu.get_output_tensors()
    tensorformat = dpu.get_tensor_format()

    if tensorformat == dpu.TensorFormat.NCHW:
        outputHeight = outputTensors[0].dims[2]
        outputWidth = outputTensors[0].dims[3]
        outputChannel = outputTensors[0].dims[1]
    elif tensorformat == dpu.TensorFormat.NHWC:
        outputHeight = outputTensors[0].dims[1]
        outputWidth = outputTensors[0].dims[2]
        outputChannel = outputTensors[0].dims[3]
    else:
        exit("Format error")

    outputSize = outputHeight*outputWidth*outputChannel

We first fetch the dimension information from our runner class. Note that our particular network only has one input tensor and one output tensor, so we always use index 0. Other CNNs may have multiple input tensors that we need to feed information to.
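The only difference between the two branches is where the channel dimension sits in dims. The sketch below shows this with a hypothetical TensorFormat enum and an output_hwc helper, both invented for illustration; the dims values are arbitrary and are not the real tensor shape of our network.

```python
from enum import Enum

class TensorFormat(Enum):
    """Hypothetical stand-in for the runner's TensorFormat values."""
    NCHW = 0
    NHWC = 1

def output_hwc(dims, tensorformat):
    """Return (height, width, channel) from a 4-element dims sequence."""
    if tensorformat == TensorFormat.NCHW:
        # batch, channel, height, width
        return dims[2], dims[3], dims[1]
    if tensorformat == TensorFormat.NHWC:
        # batch, height, width, channel
        return dims[1], dims[2], dims[3]
    raise ValueError("Format error")

dims = (1, 2, 3, 4)   # arbitrary illustrative values
h, w, c = output_hwc(dims, TensorFormat.NHWC)
outputSize = h * w * c
print(h, w, c, outputSize)
print(output_hwc(dims, TensorFormat.NCHW))
```

Whichever layout is reported, outputSize is the product of the three non-batch dimensions, so it is the same for both formats.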

    while remaining > 0:
        if (remaining > batchSize):
            runSize = batchSize
        else:
            runSize = remaining
        remaining = remaining - batchSize

The thread then chunks its allocated images by batch size.
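As a quick check of the chunking logic, the sketch below wraps the same loop in a hypothetical run_sizes function that records the size of each batch instead of running it on the DPU.

```python
def run_sizes(total, batchSize):
    """Return the list of batch sizes produced by the chunking loop."""
    remaining = total
    sizes = []
    while remaining > 0:
        if remaining > batchSize:
            runSize = batchSize
        else:
            runSize = remaining        # final, possibly smaller, batch
        sizes.append(runSize)
        remaining = remaining - batchSize
    return sizes

print(run_sizes(10, 4))
print(run_sizes(3, 1))
```

Every batch is full-sized except possibly the last one, which holds whatever is left over. With a batch size of 1, as in our run, each image is simply its own batch.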

    shapeIn = (runSize,) + tuple([inputTensors[0].dims[i] for i in range(inputTensors[0].ndims)][1:])

    """ prepare batch input/output """
    outputData = []
    inputData = []
    outputData.append(np.empty((runSize, outputSize), dtype = np.float32, order = 'C'))
    inputData.append(np.empty((shapeIn), dtype = np.float32, order = 'C'))

    """ init input image to input buffer """
    for j in range(runSize):
        imageRun = inputData[0]
        imageRun[j,...] = img[(batchCount*batchSize)+j].reshape(inputTensors[0].dims[1], inputTensors[0].dims[2], inputTensors[0].dims[3])

We then create the input buffer by indexing a batch from the images.

    """ run with batch """
    job_id = dpu.execute_async(inputData, outputData)
    dpu.wait(job_id)

We then run our batch on the DPU and wait for it to finish.

    """ calculate argmax over batch """
    for j in range(runSize):
        argmax = np.argmax(outputData[0][j])
        results[(threadId*threadImages)+(batchCount*batchSize)+j] = argmax

Finally, we use the argmax function to extract the class with the largest predicted probability. The prediction is saved into results, which is then passed back to runApp.
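The index into results combines the thread's offset, the batch's offset and the position within the batch. The sketch below works through one case in plain Python; the argmax and result_index helpers are hypothetical, and the scores and counter values are made up for illustration.

```python
def argmax(scores):
    """Index of the largest value; the first one wins on ties, as with np.argmax on a 1-D array."""
    return max(range(len(scores)), key=scores.__getitem__)

def result_index(threadId, threadImages, batchCount, batchSize, j):
    """Position of image j of the current batch in the shared results list."""
    return (threadId * threadImages) + (batchCount * batchSize) + j

scores = [0.05, 0.10, 0.80, 0.05]   # made-up output values for one image
results = [None] * 8

# Second thread (threadId 1) with 4 images per thread, second batch (batchCount 1)
# of size 2, second image in that batch (j = 1)
idx = result_index(threadId=1, threadImages=4, batchCount=1, batchSize=2, j=1)
results[idx] = argmax(scores)
print(idx, results[idx])
```

Because each thread starts at threadId*threadImages, predictions end up in the same order as the original image list regardless of which thread finishes first.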

Our post-processing in this case consists of estimating the time taken to run the app on the DPU and comparing the predictions against the ground truth to see if any of our results were wrong. Our final output should be:

Throughput: 1067.21 FPS

Custom Image Predictions:

Custom Image: test_b Predictions: B

Custom Image: test_c Predictions: F

testimage_9.png Correct { Ground Truth: H Prediction: H }

testimage_6.png Correct { Ground Truth: L Prediction: L }

testimage_5.png Correct { Ground Truth: W Prediction: W }

testimage_1.png Correct { Ground Truth: F Prediction: F }

testimage_2.png Correct { Ground Truth: L Prediction: L }

testimage_7.png Correct { Ground Truth: P Prediction: P }

testimage_4.png Correct { Ground Truth: D Prediction: D }

testimage_3.png Correct { Ground Truth: A Prediction: A }

testimage_0.png Correct { Ground Truth: G Prediction: G }

testimage_8.png Correct { Ground Truth: D Prediction: D }

Correct: 10 Wrong: 0 Accuracy: 100.00 %
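The throughput and accuracy lines in this output could be computed along the following lines. This is a sketch under assumptions rather than the app's actual code: the summarise helper is hypothetical, the elapsed time is a fixed placeholder rather than a measurement, and the labels are just the first four test images from the output above.

```python
def summarise(predictions, ground_truth, elapsed_seconds):
    """Return (correct, wrong, accuracy in percent, frames per second)."""
    correct = sum(1 for p, g in zip(predictions, ground_truth) if p == g)
    wrong = len(predictions) - correct
    accuracy = 100.0 * correct / len(predictions)
    fps = len(predictions) / elapsed_seconds
    return correct, wrong, accuracy, fps

ground_truth = ["H", "L", "W", "F"]
predictions = ["H", "L", "W", "F"]
elapsed = 0.5   # placeholder; in practice, the difference of two time.time() readings around the run

correct, wrong, accuracy, fps = summarise(predictions, ground_truth, elapsed)
print("Throughput: %.2f FPS" % fps)
print("Correct: %d Wrong: %d Accuracy: %.2f %%" % (correct, wrong, accuracy))
```

Timing only the section where the threads are started and joined keeps image loading and pre-processing out of the throughput figure.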

We have successfully designed, trained and run a CNN for our FPGA and looked at each step in detail to examine what is going on. In our final part we will be concluding this series, looking at the limitations and how we could potentially improve our CNN. We will also look at how this tutorial differs from more practical deployments of AI and what other architectures could be good for general sign language recognition.