Part 1: Introduction

An updated version of this tutorial that utilises TensorFlow 2 and Vitis AI 1.4 can be found here.

This is the first part in our multi-part tutorial on using Vitis AI with TensorFlow and Keras. Other parts of the tutorial can be found here:

- Introduction (here)

- Getting Started

- Transforming Kaggle Data and Convolutional Neural Networks (CNNs)

- Training the neural network

- Optimising our neural network

- Converting and Freezing our CNN

- Quantising our CNN

- Compiling our CNN

- Running our code on the DPU

- Conclusion Part 1: Improving Convolutional Neural Networks: The weaknesses of the MNIST based datasets and tips for improving poor datasets

- Conclusion Part 2: Sign Language Recognition: Hand Object detection using R-CNN and YOLO

Our Sign Language MNIST GitHub

Have you heard about running AI on FPGAs but are not sure how to get started? Wondering what the benefits of deploying your model on an FPGA over a GPU are, or how FPGAs even run neural networks? Here at Beetlebox we have provided a tutorial series to explain all aspects of AI on FPGAs through a practical, hands-on example, with all code available as open source.

The advancement of AI was triggered by the cheap availability of high-performance GPUs, which allowed deep neural networks to be trained in reasonable time periods and models to be run in real time. In the drive for higher accuracy, new neural networks went deeper, with more and more layers, running on more powerful GPUs. At the same time, there was also demand to make neural networks small enough to run on embedded devices such as smartphones without sacrificing accuracy. Along with newer, smaller neural network architectures, new techniques emerged that could take existing architectures and reduce their size, such as quantisation and pruning.
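To give a rough feel for what quantisation and pruning do to a network's weights, here is a minimal sketch in plain Python. It is an illustration only and not how Vitis AI implements either technique: the function names (`quantise_int8`, `dequantise`, `prune_by_magnitude`), the example weights and the pruning threshold are all made up for this example, and symmetric 8-bit quantisation with magnitude-based pruning is just one simple variant of each idea.

```python
def quantise_int8(weights):
    """Map floats to 8-bit integers using one shared scale (symmetric scheme)."""
    max_abs = max(abs(w) for w in weights)
    # Assumed convention: the largest magnitude maps to 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantised = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantised, scale


def dequantise(quantised, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantised]


def prune_by_magnitude(weights, threshold):
    """Set weights whose magnitude is below the threshold to zero."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


# Hypothetical weights from a single layer.
weights = [0.82, -0.41, 0.03, -1.27, 0.002]

quantised, scale = quantise_int8(weights)
restored = dequantise(quantised, scale)
pruned = prune_by_magnitude(weights, threshold=0.05)

print("quantised:", quantised)
print("restored: ", restored)
print("pruned:   ", pruned)
```

Each quantised weight fits in one byte instead of four, at the cost of a small rounding error in the restored values, while pruning replaces the smallest weights with zeros so that they can be skipped or stored compactly.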

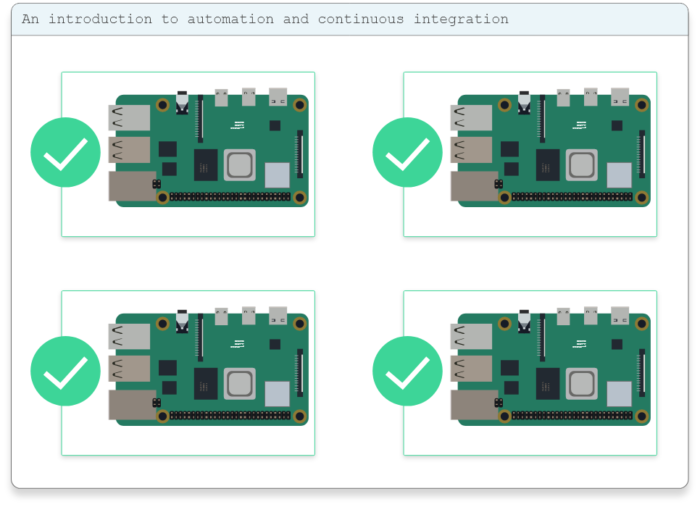

These new techniques, though, did not run optimally on GPUs, yet were found to run well on FPGAs. FPGAs were out-competing GPUs in terms of power consumption without sacrificing performance. Previously, however, achieving these advantages required a team of hardware experts experienced in both deep learning and FPGA design, which is unrealistic for the majority of companies.

With the new release of Vitis from Xilinx, we are now seeing a fundamental shift: FPGAs are no longer restricted to hardware experts, as new tools make them accessible to software engineers and data scientists. But where to get started?

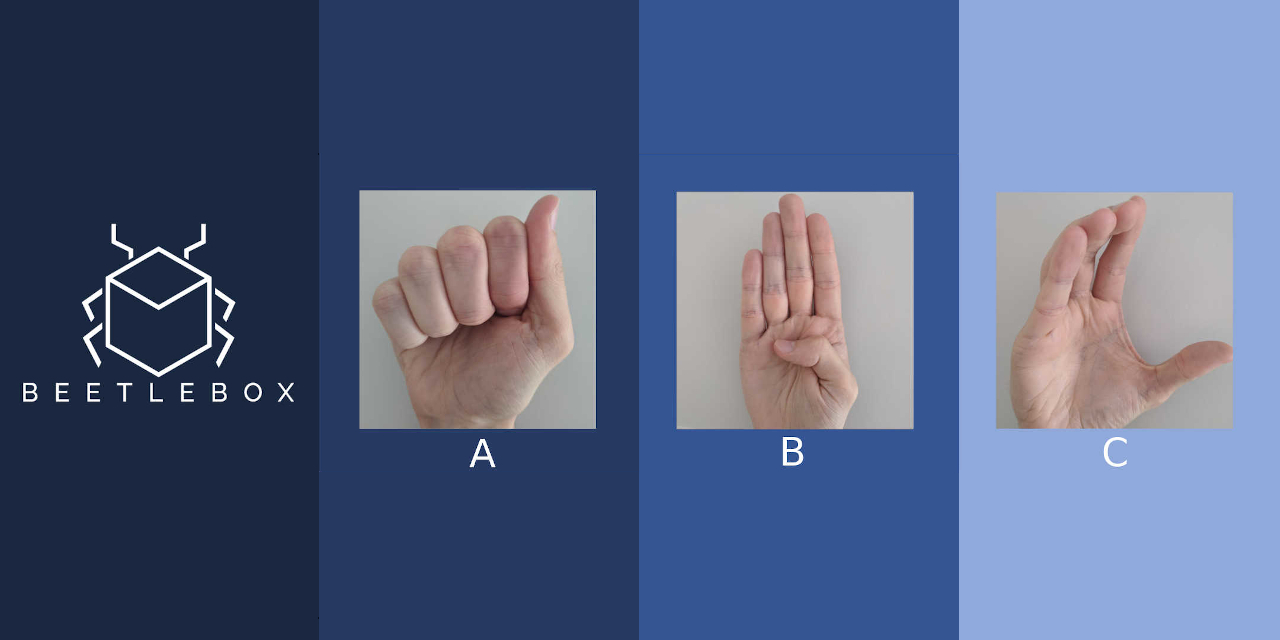

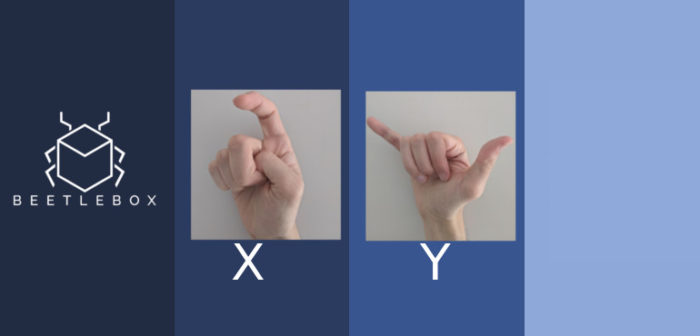

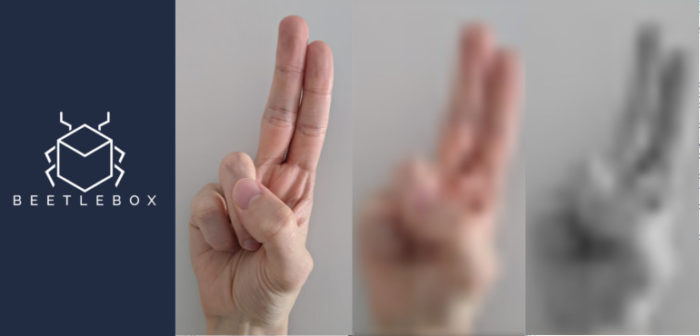

Here at Beetlebox we have made a new tutorial, which will cover both the theoretical and practical sides of developing AI on FPGAs. We will be using Xilinx’s Vitis AI toolset, which allows us to deploy models from TensorFlow and Keras straight onto FPGAs. We will be using the Sign Language MNIST dataset from Kaggle, as it is small enough for a model to be trained on it using CPUs only. We also wish to encourage embedded devices to become more accessible through AI and hope this tutorial will lead others to see what is possible. All code is open source and is released under the Apache 2.0 licence.

Over the next tutorials we will be going through the entire process from training to deployment, explaining why we are applying certain techniques and what Vitis AI is doing. We will be covering subjects such as:

- The difference between training and inference

- What quantisation is and why it is so beneficial for FPGAs

- What pruning is and why we wish to apply it

- The architecture behind Vitis AI and how it works

We will be explaining these subjects from a practical angle through our Sign Language MNIST example, showing how we can apply the techniques to develop optimal models on FPGAs. If you would like to get going immediately, all code and explanations on how to run it are available on our GitHub page. Through this tutorial we hope to form a launch pad for those looking to get involved with AI on FPGAs.
